What is XR (Extended Reality)?

Imagine being fully immersed in a design, or experiencing a new product before it is built. Or meeting a friend face to face when they are really on the other side of the world. Extended reality, or XR, encompasses virtual reality (VR), augmented reality (AR), and mixed reality (MR).

VR is the experience of fully entering an environment and interacting with virtual objects, instead of viewing an interface and only imagining it.

AR, by contrast, is an experience in which virtual elements are added to the real world, rather than the person being immersed in a virtual world. These elements are integrated so that they fit appropriately into real spaces.

MR is a mix of these experiences, with virtual and real-world elements interacting within one environment. These forms of XR are made possible by an array of devices, including mobile phones and VR headsets.

In short, Extended Reality (XR) is an umbrella term that covers Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR).

XR has countless applications. The following are a few of the major domains:

- Entertainment & Gaming

- Healthcare

- Engineering and Manufacturing

- Food

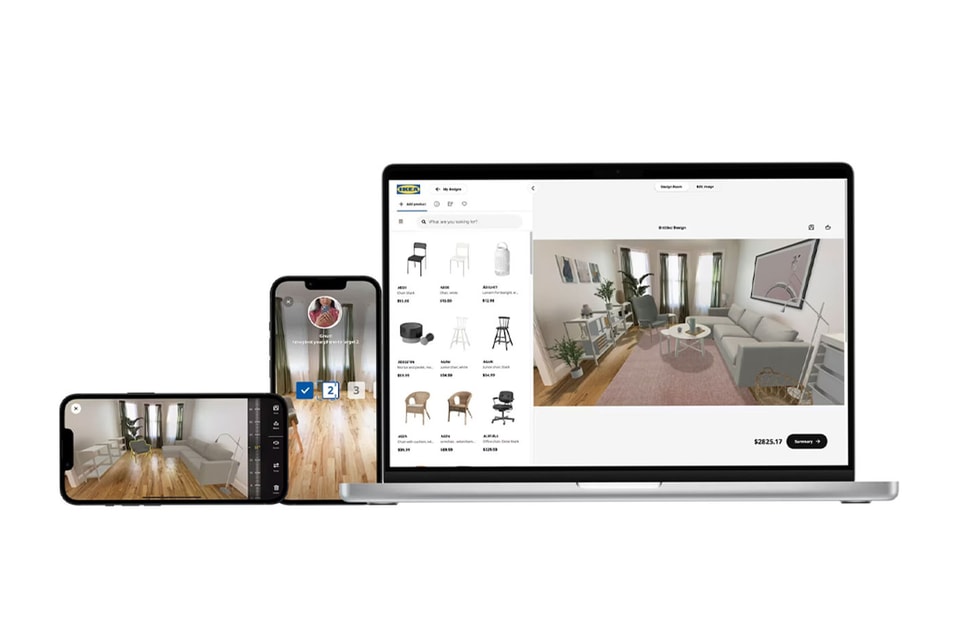

- eCommerce and Retails

- Education

- Real State

- Workspace

- Defense

- Travel and Tourism

Among them, two applications excite me the most, because their practical implementations are productive and leave huge scope for further development for the betterment of the world. These two products are:

- Ambiens

- BMW M2 Mixed Reality

1. Ambiens XR Viewer

These days, architects and real estate professionals often struggle to explain their projects to clients or potential investors. It is hard to convince someone who cannot picture the finished result. To overcome this, Ambiens (a creative technology company) came up with an XR visualization and simulation solution in which the entire house is simulated using AR and presented to customers as if they were standing in the actual house. The architects and designers visit the site, plan how the place will look after construction, and hand that plan to an XR developer. The developer then builds a virtual design in Augmented Reality, stores a link to it in a barcode, and places the barcode at the location. When customers arrive, they simply scan the barcode with a mobile phone and can see the final product in the empty space.
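The barcode step described above can be sketched roughly as follows. This is only an illustration and not how Ambiens actually implements it: the viewer address `viewer.example.com`, the parameter names `site` and `model`, and both helper functions are hypothetical. The sketch builds the link that would be stored in the barcode and shows how a phone app could read the scene details back out of it after scanning.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Hypothetical address of an AR viewer page; not a real Ambiens endpoint.
BASE_URL = "https://viewer.example.com/ar"


def build_scene_link(site_id: str, model_id: str) -> str:
    """Build the link that would be encoded in the barcode placed on site."""
    query = urlencode({"site": site_id, "model": model_id})
    return f"{BASE_URL}?{query}"


def read_scene_link(link: str) -> dict:
    """Recover the site and model identifiers after the barcode is scanned."""
    params = parse_qs(urlparse(link).query)
    return {"site": params["site"][0], "model": params["model"][0]}


if __name__ == "__main__":
    link = build_scene_link("plot-42", "villa-v3")
    print(link)
    print(read_scene_link(link))
```

Turning the link into a printable barcode image would need a separate barcode or QR code library, which is left out here.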

Features of Ambiens

In the field of real estate, Ambiens provides the following realistic visualization features:

- High-definition Rendering

- Video Tour

- 3-D Interactive Model and Maquette

- Augmented Reality

- 360° Interactive and Immersive Navigation

This product helps customers connect with the architect's and designer's ideas, request changes as needed, and experience those changes without any confusion.

Scope of Improvement

It is a well-developed product, but it lacks a few features that make a big difference to the price of a real estate property, such as the "view from the balcony" and "sunlight entering through the window". These could be added to the project if a responsive VR simulation were created.

For example:

- While touring the house in the product, you step onto the balcony and see a beautiful beach or forest. Customers would feel as if they were physically viewing the property, and that mesmerizing sight could persuade them to buy it on the spot.

- Some people like natural light and a breeze entering the house through the windows. This cannot be shown in an AR demonstration, but it can in a metaverse. Based on the customer's requirements, the builder can then make the necessary changes.

2. BMW M2 Mixed Reality

BMW proposed a game-like experience for customers who want to take part in a car race, under the motto "drive the change – change to drive". Although this concept is not yet available to the public, it has the potential to change traditional car racing. BMW uses Mixed Reality here: the driver drives a real car on a real track or road, but what he sees is not the real world. Instead, he is taken into a virtual environment like his favorite car racing game, where the system presents him as a racer on a racing track, accompanied by exciting music. The system creates the racing track based on the road and obstacles of the real world and maps it into a virtual world with additional game graphics and sound effects. The driver of the BMW M2 must wear a virtual reality headset, which shows a virtual world programmed by BMW M to represent something called 'M Town'.

They have also installed "SmartTrack" by ART, an integrated tracking system, inside the car to track the driver's head movement. It has two wide-angle cameras and a controller integrated into a single housing, and it can sync with other devices. Overall, this raises the tracking frequency and lowers the latency of the VR application, which results in more accuracy.
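The link between tracking frequency and latency can be illustrated with some simple arithmetic. The sketch below is a rough model, not a description of SmartTrack itself: the rates of 60, 120, and 240 Hz and the 5 ms processing time are made-up example values, and both function names are hypothetical. It assumes that a head pose can be at most one sample interval old, plus the time needed to process and deliver it.

```python
def sample_interval_ms(tracking_hz: float) -> float:
    """Time between two consecutive pose samples, in milliseconds."""
    if tracking_hz <= 0:
        raise ValueError("tracking_hz must be positive")
    return 1000.0 / tracking_hz


def worst_case_pose_age_ms(tracking_hz: float, processing_ms: float) -> float:
    """Upper bound on how old a head pose is when the renderer reads it.

    The pose may have been captured up to one full sample interval ago,
    plus the time the tracker needs to process and deliver it.
    """
    return sample_interval_ms(tracking_hz) + processing_ms


if __name__ == "__main__":
    # Illustrative rates only; these are not SmartTrack specifications.
    for hz in (60, 120, 240):
        age = worst_case_pose_age_ms(hz, processing_ms=5.0)
        print(f"{hz} Hz -> pose is at most {age:.2f} ms old")
```

Under this model, doubling the tracking frequency halves the sampling part of the delay, so the virtual scene follows the driver's head more closely.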

Features of BMW M2 Mixed Reality

The BMW team aimed to provide:

- A game-like, sporty experience with real motion, which in turn causes negligible motion sickness

- SmartTrack for more accurate tracking

- 360° Navigation

- High-Definition Rendering

Scope of Improvement

It could be implemented in real-world traffic scenarios, keeping all traffic rules and regulations in mind. A smart system could detect roadside signposts and modify the game scenario accordingly, making driving more fun and relaxing. In this way, after a long and hectic working day, one could enjoy the ride home as a gaming experience, while the smart system tries to keep the drive comfortable, safe, and enjoyable.

Conclusion

The possibilities of XR are endless, so what does the future hold for extended reality? It is expected to improve with more sophisticated and more affordable hardware, faster rendering, and more detailed modeling. More industries will implement XR in more areas, including education, hobbies, health, sports, and work. Eventually, people may move into a mixed reality environment in an interconnected metaverse. This might include working together with innovators, production entities, and offices everywhere to increase knowledge, invent intelligently, use resources intelligently, and solve more intricate problems. XR extends reality in many ways, increasing our understanding of the real world and inviting people to move beyond its edges into something previously unimaginable.

Citations

- SmartTrack: https://ar-tracking.com/en/product-program/smarttrack3 and https://www.youtube.com/watch?v=fpkHovOfXdQ&ab_channel=AdvancedRealtimeTrackingGmbH%26Co.KG

- Ambiens XR Viewer: https://www.youtube.com/watch?v=N89oQf3V6LE&ab_channel=AmbiensVR

- BMW M2 Mixed Reality: https://www.bmw-m.com/en/topics/magazine-article-pool/m-mixed-reality.html, https://www.youtube.com/watch?v=h6dv8Xn0HJo&ab_channel=BMWBLOG, and https://www.topgear.com/car-reviews/m2/first-drive-2