Gameplay video: https://www.youtube.com/watch?v=IAMPr9kytfk

The Elemental Tetrad

Introduction & Story

Valheim is a 3D survival game for 1 to 10 players, based on Norse mythology, in which players gather resources, craft tools and weapons, build shelters, and ultimately find and defeat bosses to prove themselves to the god Odin.

Mechanics

The game unfolds in a huge, dynamic, procedurally generated world that players are free to explore, although the progression of the game is sequential.

Players start at the world origin, next to an altar where they offer items looted from each of the six bosses as sacrifices to Odin. The world consists of many biomes, such as dark forests, swamps and mountains. Each biome has its own crafting materials and monsters, and each biome type has one corresponding boss.

Players are free to explore the world, find the bosses and defeat them, but in practice they do so sequentially, because the game's mechanics discourage them from meaningfully exploring a biome that corresponds to a later boss. For example, the Dark Forest has an abundance of copper deposits, but players must first defeat the previous boss to craft their first pickaxe before they can mine.

Aesthetics

In terms of graphics and modelling, the game is not impressive: in-game entities have very coarse 3D meshes, and the animations are unremarkable. However, its rendering and lighting effects are very impressive.

The game also has immersive background music that matches the theme of each biome. When players enter a “higher level biome” for the first time, eerie music plays to warn them that they are entering a biome beyond their strength.

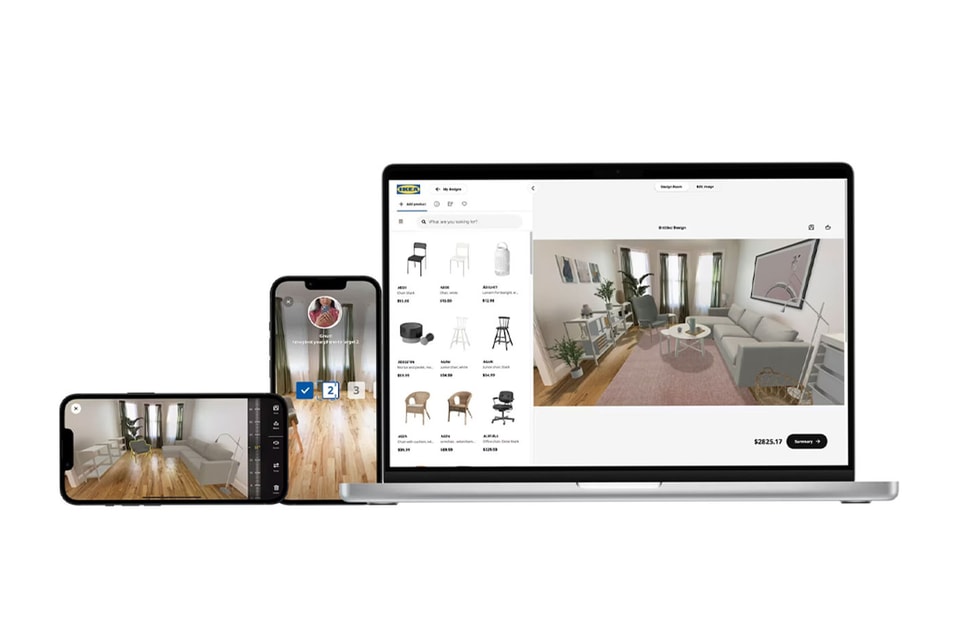

Technology

Valheim is an indie game developed by Iron Gate Studio, a team of only five developers, using the Unity game engine. Despite its many elements and game mechanics, the game is surprisingly small: the install size is less than 1 GB (probably a result of the low polygon counts of in-game entities), and its system requirements are very low.

Lenses

#3 Lens of Venue

The game map is randomly generated for each world, which ensures unique gameplay every time players start a new world. However, each biome has its own generation criteria based on distance from the world center. This ensures that new players are not confronted with more dangerous biomes when they first begin, and that players are expected to travel further from their starting point to explore as the game progresses.
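The distance-based generation described above can be illustrated with a minimal sketch. It assumes a hypothetical table of distance bands per biome (`BIOME_BANDS`, with made-up radii) and hypothetical helpers `eligible_biomes` and `generate_biome`; Valheim's real generator is not public in this document and certainly uses different values and noise functions. The point is only that a world seed makes each map unique, while the distance bands keep the starting area safe.

```python
import math
import random

# Hypothetical distance bands (distance from the world centre, in metres).
# Illustrative values only, NOT Valheim's real generation parameters.
BIOME_BANDS = [
    ("Meadows", 0.0, 1500.0),
    ("Dark Forest", 500.0, 4000.0),
    ("Swamp", 2000.0, 6000.0),
    ("Plains", 3000.0, 10000.0),
]


def eligible_biomes(x: float, z: float) -> list[str]:
    """Return the biomes allowed at (x, z), based only on distance from the centre."""
    dist = math.hypot(x, z)
    return [name for name, lo, hi in BIOME_BANDS if lo <= dist < hi]


def generate_biome(seed: int, x: float, z: float) -> str:
    """Pick one eligible biome for the point (x, z) using a per-world seed."""
    options = eligible_biomes(x, z)
    if not options:
        return "Ocean"  # hypothetical fallback beyond the last band
    # Seeding with the world seed plus the coordinates makes the choice
    # reproducible within one world but different between worlds.
    rng = random.Random(f"{seed}:{x}:{z}")
    return rng.choice(options)
```

With these assumed bands, any point near the origin can only ever be the starting biome, whatever the seed, while points a few kilometres out can become any of the later biomes.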

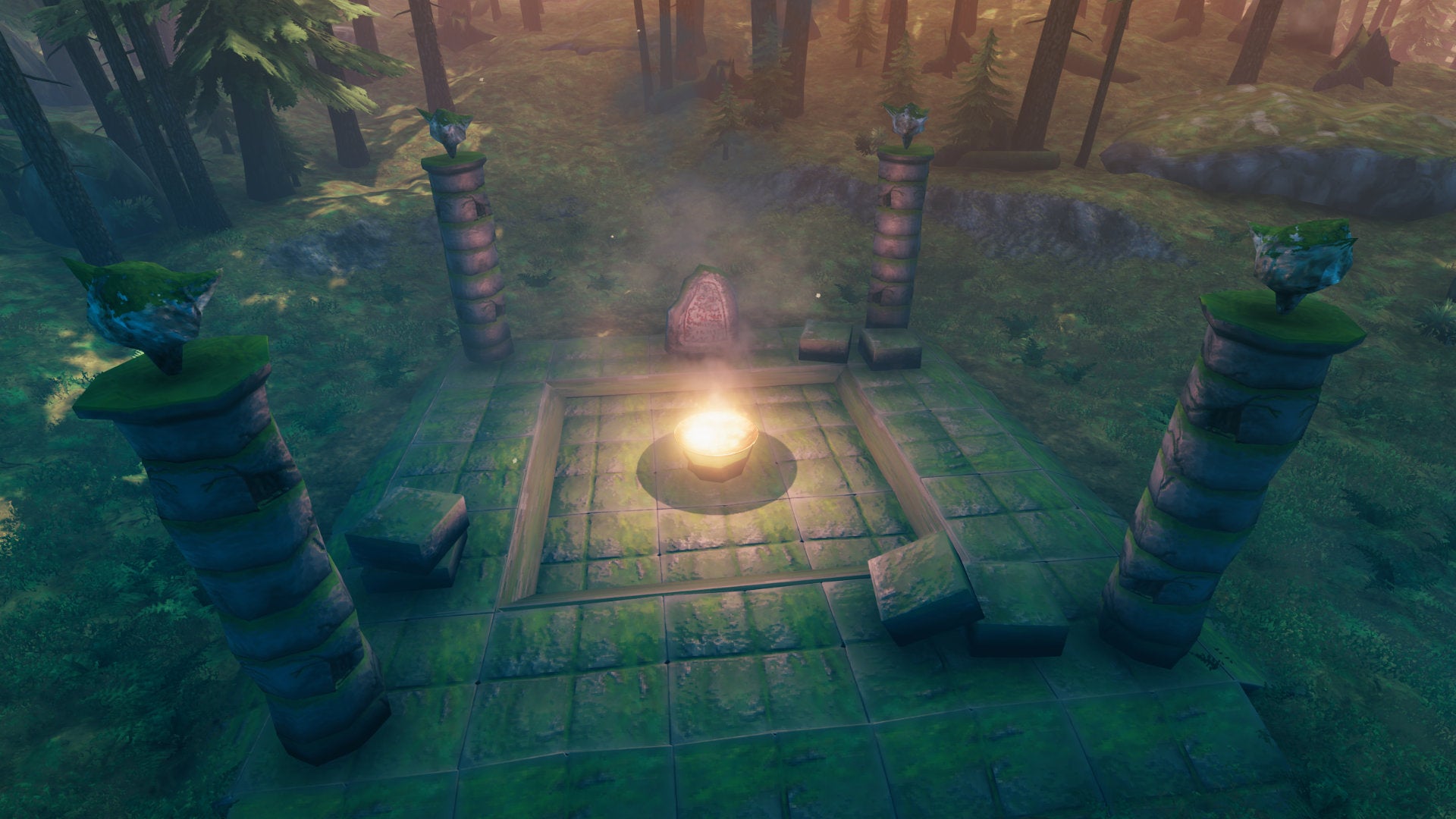

#8 Lens of Problem Solving

To defeat each boss, players must first explore the world and find the boss's altar. There, they must offer a specific item found in the corresponding biome as a sacrifice to summon the boss. A carved stone at the altar shows a riddle hinting at the correct item. This mechanism not only compels players to think about and solve the riddle, but also encourages them to explore the biome thoroughly so that they gain access to all of its items.

#35 Lens of Expected Value

When players are killed in Valheim, they drop all the items in their inventory at their corpse, and their combat and survival skill levels drop. They then respawn and must return to the corpse to recover their items. Such punishments discourage players from entering a new biome or summoning a boss before they are well equipped. On the other hand, venturing into a new biome gives access to new materials for crafting more powerful equipment. As a result, players must weigh carefully whether to keep upgrading their equipment in the current biome or to advance the game's progress.
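The trade-off above is an expected-value comparison, which a minimal sketch can make concrete. It assumes each option can be summarised by three hypothetical numbers (chance of surviving, value of the reward, value lost on death); the `Choice` type, the helper names and every number below are illustrative inventions, not data from the game.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Choice:
    name: str
    p_survive: float   # hypothetical probability of surviving the attempt
    reward: float      # hypothetical value of what success unlocks (new materials)
    death_cost: float  # hypothetical value lost on death (corpse run, skill loss)


def expected_value(c: Choice) -> float:
    """EV = p * reward - (1 - p) * death_cost."""
    return c.p_survive * c.reward - (1.0 - c.p_survive) * c.death_cost


def best_choice(choices: list[Choice]) -> Choice:
    """Pick the option with the highest expected value."""
    return max(choices, key=expected_value)


if __name__ == "__main__":
    stay = Choice("Keep upgrading in the current biome", 0.95, 10.0, 20.0)
    advance = Choice("Enter the next biome", 0.5, 40.0, 30.0)
    # With these made-up numbers, staying (EV about 8.5) beats advancing (EV 5.0).
    print(best_choice([stay, advance]).name)
```

Raising `p_survive` for the advance option (for example, after better equipment is crafted) is enough to flip the decision, which mirrors how upgrading gear makes the next biome worth the risk.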

#51 Lens of Imagination

One element that makes Valheim stand out from other survival games is its high degree of realism. Buildings in Valheim follow real-world physics principles: for example, each material has a limited load-bearing capacity, and while building, players can see the load on each segment of the structure through different color codes. In addition, players need to account for things such as ventilation when lighting a fire indoors. Such principles push players to build realistic shelters, giving them a more immersive experience and making them feel as if they themselves were warriors surviving in the wild.
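The load-bearing idea can be sketched as support propagating outward from pieces that touch the ground. This is a minimal model under stated assumptions: the `MATERIALS` table, the per-joint loss, the color thresholds and the helper names (`compute_support`, `color`, `collapsing`) are all hypothetical and are not Valheim's actual values or code. Each piece keeps the best support any neighbour can pass on, reduced by its own material's loss and capped at its material's maximum.

```python
from collections import deque

# Hypothetical material parameters: (maximum support, support lost per joint).
# Illustrative numbers only; they are NOT Valheim's real values.
MATERIALS = {
    "wood": (100.0, 20.0),
    "stone": (1000.0, 100.0),
}


def compute_support(pieces, edges, grounded):
    """Propagate support from grounded pieces through connected pieces.

    pieces:   dict mapping piece id -> material name
    edges:    iterable of (a, b) connections between pieces
    grounded: set of piece ids resting directly on the ground
    Returns a dict mapping piece id -> support (0.0 means unsupported).
    """
    neighbours = {p: [] for p in pieces}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)

    support = {p: 0.0 for p in pieces}
    queue = deque()
    for p in grounded:
        support[p] = MATERIALS[pieces[p]][0]  # grounded pieces get full support
        queue.append(p)

    # Relax outward: a piece only improves if a neighbour offers more support
    # (after the per-joint loss) than it already has.
    while queue:
        p = queue.popleft()
        for q in neighbours[p]:
            max_q, loss_q = MATERIALS[pieces[q]]
            candidate = min(max_q, support[p] - loss_q)
            if candidate > support[q]:
                support[q] = candidate
                queue.append(q)
    return support


def color(piece, pieces, support, grounded):
    """Map a piece's support ratio to a hypothetical color code."""
    if piece in grounded:
        return "blue"
    ratio = support[piece] / MATERIALS[pieces[piece]][0]
    if ratio >= 0.75:
        return "green"
    if ratio >= 0.5:
        return "yellow"
    if ratio >= 0.25:
        return "orange"
    return "red"


def collapsing(support):
    """Pieces with no support left would fall down."""
    return sorted(p for p, s in support.items() if s <= 0.0)
```

For a column of six wooden pieces with only the bottom one grounded, the assumed values give supports of 100, 80, 60, 40, 20 and 0, so the top piece would collapse and the pieces below it would shade from blue through green, yellow and orange to red.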

References:

- https://store.steampowered.com/app/892970/Valheim/

- https://www.gamespot.com/articles/the-valheim-viking-guide-for-beginners-how-to-best-survive-and-ascend-to-valhalla/1100-6487525/

- https://www.rockpapershotgun.com/valheim-elder-boss-fight-elder-location-how-to-summon-and-beat-the-elder

- https://www.thegamer.com/valheim-tips-recover-items-die/#restock-at-your-home-camp